- Sat. Apr 20th, 2024

Latest posts

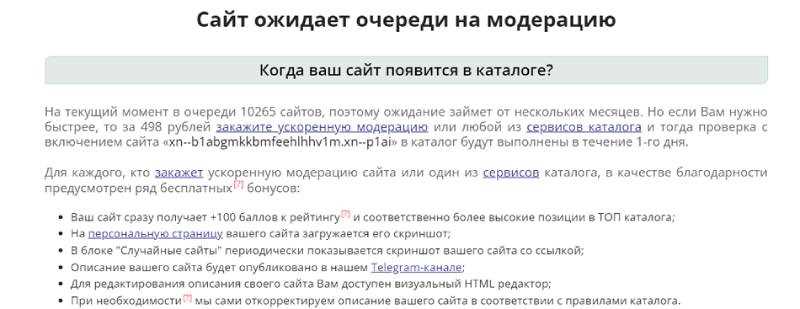

Registering in article directories or website directories: which should you choose?

Registering in article directories and registering in website directories are two popular ways to promote a website in search engines. Both can improve a site's visibility in search results and bring in new visitors…

Secrets of writing a successful advertisement

Advertising matters enormously for any business. It helps attract new customers, raise brand awareness, and increase sales. Writing ad copy is one of the most effective advertising tools. But how do you do it right…

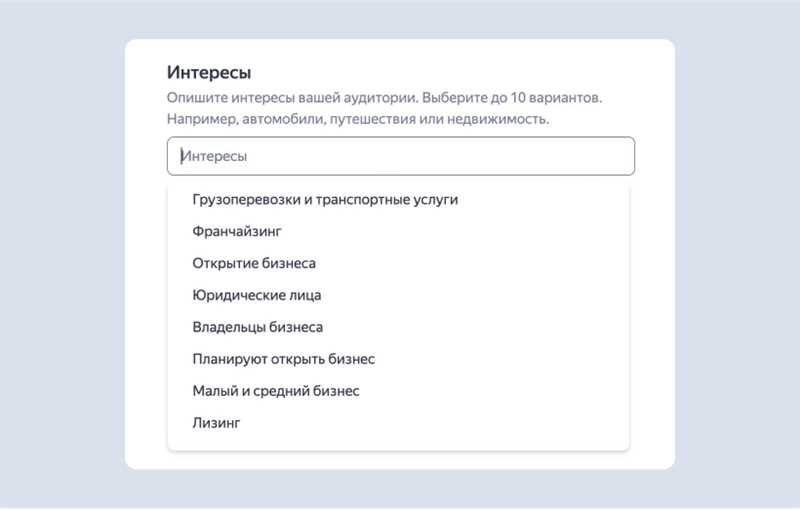

Google Ads and its competitors: analysis and negotiation strategies

Google Ads is one of the most popular and effective online advertising tools, letting advertisers reach their target audience and raise awareness of their brand. However, this market also has many…

Types of online advertising: a modern typology (infographic)

Online advertising is one of the most effective ways to promote goods and services. It offers plenty of ways to capture the audience's attention and reach your goals. However, with the emergence of new technologies…

10 resources for downloading free icons for your website

Icons and pictograms play an important role in web design, making the user interface clearer and more intuitive. However, finding high-quality icons can be a challenge, especially if you don't want to…

How to add a store to Yandex.Market: instructions and life hacks

Yandex.Market is one of the most popular online marketplaces for buying goods and services in Russia. It lets stores sell their products and attract new customers. If you want to add your…

The 2015 periodic table of ranking factors

The 2015 periodic table of ranking factors is a tool for identifying and sorting the key factors that affect how websites rank in search engines. The table was developed by search engine optimization specialists…

How rank tracking relates to a site's rating and traffic

Rank tracking is the process of monitoring changes in a site's rating and position in search results. When a site holds high positions, users find it more easily and it gets more traffic. However, sometimes…

Tragedy at VK: details of the deaths of two top managers

VKontakte, one of the most popular social networks in Russia, is in the spotlight once again. This time the cause is a tragic incident that claimed the lives of two senior employees of the company. The details of this…

20 tools for checking a website's backlink profile

A website's backlink profile is one of the important factors affecting its ranking in search engines. The more high-quality, relevant links point to your site, the higher its authority in…